7. The Motte, The Bailey and The Inevitable Conclusion

Some readers of earlier issues may have wondered: yes, people and corporations are changing stories and manipulating small parts of our daily activities, but so what? The stakes may seem small, and the effect on the population smaller still. But the proper foundations had to be laid before a proper frame could be built.

There’s been some hullabaloo about China’s Social Credit System lately, ignited by the proposed ban on TikTok in the US.

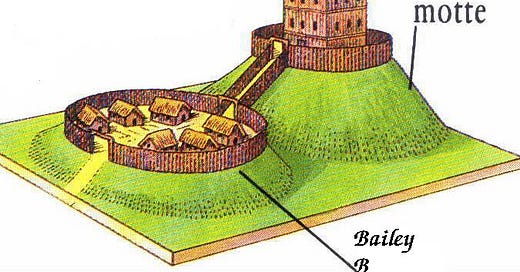

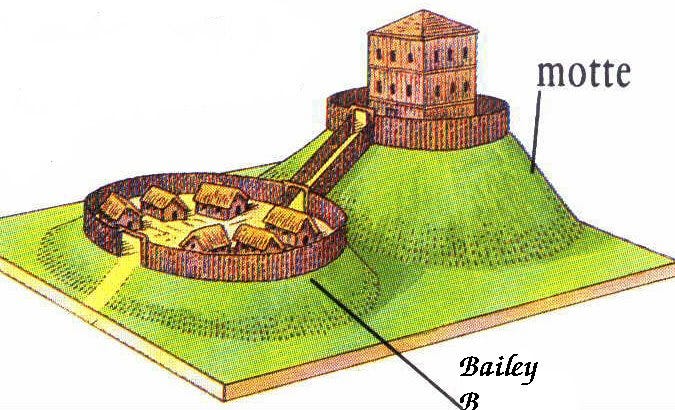

Now consider the Motte and Bailey:

You live in the Motte and let the Bailey be attacked. The two are related, but you are willing to sacrifice the lesser piece while you defend the stronger, more relevant one. Another way to think about it: the Bailey is a preemptive straw man you let your opponent have while you do the real work out in the open, undisturbed by the attacks on it.

Bailey: Data collection and tracking invades our privacy! (TRUE!)

Motte: It’s not so much about privacy. Data collection and tracking are used to set up, track, and validate behavioral changes when an input is introduced to the population.

Another one:

Bailey: The CCP’s Social Credit Score isn’t real (TRUE!)

Motte: It doesn’t matter whether the “Score” is real or not.

No one serious is actually interested in the existence of "The Score" of the CCP's social credit system, or "The Number" that deems some person worthy or not. Nor is the problem the registration of some ID attached to a person or a business. It doesn't even matter whether they collect data on everyone and calculate everyone's misdeeds against these IDs. Everyone is too focused on the punishment side of record-keeping and surveillance, or on data leaks.

The real problem, and I stress this, is that the data is used to manipulate, to track, and to set up conditions that the CCP, or anyone with a significant amount of data, can use to observe how behavior changes when inputs are introduced. The data is used to track and validate compliance. That is, the data gives anyone with sufficient access to it the means to run mass-scale behavioral experiments aimed at an intended outcome. The problem, then, is the outcomes toward which you are being led. They are opaque and unknown.

A real example: what do you think Twitter's algorithms do? They are employed to subtly change your behavior without your explicit knowledge, algorithmically manipulating your feed toward an intended outcome. The simplest such outcome: do you read more or less Twitter?

You can make these outcomes conditional: do you read more or less of the Twitter content that confirms certain political views? Those who hold the keys to the data can figure that out very quickly and iterate on it again and again.
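For readers unfamiliar with the mechanics, the kind of feed experiment described above is commonly run as an A/B test. The sketch below is a minimal illustration under stated assumptions, not any platform's actual code: it assumes users are split into two groups by hashing a hypothetical experiment name together with their user ID, that one group sees a changed feed, and that the outcome measured is the difference in average minutes spent reading. The names `assign_arm`, `estimate_lift`, and `"feed_v2"` are hypothetical.

```python
from __future__ import annotations

import hashlib
from statistics import mean


def assign_arm(user_id: str, experiment: str) -> str:
    """Deterministically bucket a user into 'control' or 'treatment'.

    Hashing experiment name + user id (an assumed scheme) means the same
    user always lands in the same group for a given experiment.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "treatment" if int(digest, 16) % 2 else "control"


def estimate_lift(minutes_by_user: dict[str, float], experiment: str) -> float:
    """Return mean minutes (treatment) minus mean minutes (control).

    A positive value means the changed feed kept people reading longer.
    """
    arms: dict[str, list[float]] = {"control": [], "treatment": []}
    for user_id, minutes in minutes_by_user.items():
        arms[assign_arm(user_id, experiment)].append(minutes)
    if not arms["control"] or not arms["treatment"]:
        raise ValueError("both groups need at least one user")
    return mean(arms["treatment"]) - mean(arms["control"])
```

A production system would also check whether the difference is statistically significant, then rerun the same loop with a new variant; because the assignment and measurement are automated, each round costs almost nothing.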

Now, imagine a team of tens of thousands of people dedicated specifically to this purpose. Will they change your behavior and beliefs offline, too? Yes!

Now say that instead of a single website (Twitter), an entire nation decides not only to control what you see and read (the CCP is confirmed to do this) but also to dictate which behavioral patterns it wants to enforce with that control (the CCP as a moral authority).

Bailey: The SCS doesn’t punish people (TRUE!)

Motte: The SCS is not supposed to be a punishment.

The SCS is predominantly a deterrent, not a punishment. It doesn't WANT to punish you. It wants your cooperation. Its opacity means you don't know what the rules of the game are. Ignorance of the rules forces people to play it safe.

The problem is that the game is rigged. The dealer knows the rules because they created them. Moreover, they can change the rules without you knowing.

People who are calling this "Black Mirror" have it wrong. It isn't someone's life falling to pieces over the course of a 45-minute episode. It is a gentle guiding hand that firmly presses you in an unknown direction every day of your life.

Almost no one I've read who reports on the CCP, the SCS, or algorithm ethics seems to truly understand how these algorithms work. And the algorithm experts don't have a single clue about science, the philosophy of science, or the ethics of science. This is a gigantic issue.

Why do I stress agency and philosophy in the face of such an issue? Because the tide is moving in this direction, and barring some human revolution (for which I won't be holding my breath), the only way to swim against the current is to grow your own faculties of agency and attention.

The algorithms are designed to become epistemic agents. They are designed to tell you what is true: what to buy, what to read, what to believe. They are all designed to nudge populations toward compliance with some output metric. And these metrics are completely opaque.

The CCP’s Social Credit System is a real and present example of how seemingly small concessions, accumulated over time and driven toward an overarching, targeted outcome, can dramatically change and control the attitudes and behaviors of a nation of well over a billion people. The net results are by no means small, either in total effect or in numbers.

The core issue isn’t whether people are being punished by the SCS. The SCS works best as a nudge, a deterrent to unwanted behavior, not as punishment. Only when people are not deterred does it resort to punishment. It’s subtle by design. Its goal is to shape behavior toward compliance, not to reverse behavior by restricting actions or rights. Compliance is easier, more effective, and less costly than punishment.

The “operating systems” in North American countries differ slightly from China’s: they do not follow an overarching mandate set by a ruling party-state. Yet the same systems, protocols, and algorithms are in use there, deployed not by the government but by increasingly monopolistic technology companies that increasingly want to shape the tone and boundaries of national conversation and of morality at large.

Nor, again, is it about the existence of an actual number that constitutes the “social credit score.”

Furthermore, North American tech companies do sell the outcomes of their validated datasets and experiments, as well as technology, to the CCP, which is more than happy to incorporate them into its national Social Credit System. And of course, technology often transfers in both directions.

University of Pennsylvania philosopher Lisa Miracchi offers a criterion for "Artificial Minded Intelligence": How might artificial processes give mind to minded intelligence, both in the general case and in cases of specific mental kinds, such as perception, knowledge, language comprehension, and goal-directed action?

I propose an inversion, a desideratum for new study toward agency: How do artificial processes take away from minded intelligence, such as persons, both in the general case and in cases of specific mental kinds, such as perception, knowledge, language comprehension, and goal-directed action?